Eight Commandments for AI: A Consumer's Perspective

Preamble

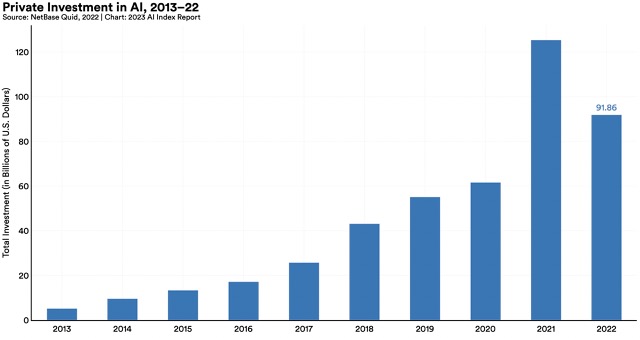

Between 2013 and today, at least $443 billion has been invested to bring AI to every imaginable corner of our lives, our workplaces, our families, and our devices.

(Source: Stanford AI Index[1])

In the same timeframe, somewhere around $335 million was invested in “AI existential risk research” – trying to figure out how to keep AI from destroying civilization.[2] That’s more than 1,000 times less than the venture money, but still a pretty hefty sum.

But how much money is allocated to simply ensuring that AI doesn’t make us dumber? Or more isolated, or more depressed?

I don’t know. Maybe a few million in charitable donations and university research grants and congressional hearings?

This seems to me a fundamental imbalance. We know that every technology has uses that amplify our human values and uses that degrade our human values. But the big money that’s backing technology doesn’t – can’t – differentiate between the two. After all, our human values are ambiguous and fuzzy and conflicted.

With AI, I think we’re left hoping for a lucky coincidence. We’re hoping that AI will be more like a bicycle for the mind (as Steve Jobs famously put it[3]) than, say, a car for the mind.

What do I mean by that? I mean that the bicycle is, on net, very clearly a positive invention for humanity. It’s not expensive and yet it expands freedom considerably. You can go farther with less effort. Kids can participate. It runs on fully renewable, clean human energy.

Cars are a different story. There’s at the very least a strong case to be made that personal cars have been, on net, bad for humanity.

They kill more than a million people every year. They make even the most peaceful neighborhoods inherently deadly, most tragically for children. They spread us apart. They pollute with smoke and oil. They are fundamentally at odds with the urban cultures that produced all of our great towns and cities. Car traffic is gnawing away at the soul of the American worker. And making us obese. Etc.

I’m not saying that cars are entirely bad. But if we had known about all the death and urban sprawl and parking lots and traffic, maybe we’d have said “Not so fast!”, assuming we somehow had that collective capacity.

Maybe we’d have allowed interstate highways and freight transport for the sake of global commerce, but kept cars out of our urban cores and away from our kids and our walkways.

There were voices of restraint. Lewis Mumford (of The New Yorker and The City in History), Benton MacKaye (creator of the Appalachian Trail), Clarence Stein, and their colleagues sounded the alarm as far back as the 1920s and 1930s about the spectre of car culture and urban sprawl. They even managed to build car-resistant communities before it was too late.[4]

But cars won. Clearly. Do we stand a chance against AI?

Sure, governments will come and make some rules. They’ll do the equivalent of mandating seatbelts, which is good. But they won’t – and can’t – guide the use of technology towards human goodness and away from human decay. That’s up to us. Can we do better?

Let’s be enlightened consumers. Let’s say ahead of time: we know for a fact that AI can be used in ways that are corrosive to society. Let’s declare our intent not to be parties to enabling that destruction.

What kind of destruction?

I am not referring to AI autonomously killing people.

I’m also not talking about the “ask an AI to go steal some credit card numbers for me” use case. We all agree we don’t want to enable that. Governments and self-regulation will at least try to suppress clearly illegal usage.

I’m talking about the exact use cases that the Marketing Team might mention in their ads. The equivalent of a Pontiac ad in 1940 highlighting the safety of their deadly machines.

It would be good if we didn’t let ourselves be fooled.

So, without further ado, I’ve written up the Eight Commandments of AI. I don’t exactly expect that anybody will pay much attention to these. But if they did, and we found a way to collectively stand by them (or some better version of them), we could – maybe, just maybe – be something other than fuel for this freight train, for this megamachine, as Mumford described it.

The Eight Commandments of AI

1. Thou Shalt Not Allow AI To Make Thee Dumber

GPS apps have made us less capable at wayfinding.[5] How do we make sure that AI doesn’t make us less capable at thinking?

The Boston Consulting Group recently studied how people work with AI. The AI (GPT-4) made participants 40% better at generating new business ideas but 23% worse at business problem-solving.[6]

But here’s one troubling finding:

Digging deeper, we find that because GPT-4 reaches such a high level of performance on the creative product innovation task, it seems that the average person is not able to improve the technology’s output. In fact, human efforts to enhance GPT-4 outputs decrease quality. (See the sidebar on our design and methodology for a description of how we measured quality.) We found that “copy-pasting” GPT-4 output strongly correlated with performance: The more a participant’s final submission in the creative product innovation task departed from GPT-4’s draft, the more likely it was to lag in quality. (See Exhibit 6.) For every 10% increase in divergence from GPT-4’s draft, participants on average dropped in the quality ranking by around 17 percentile points.

Another finding: the performance boost for using GPT-4 was much higher (43%) for average performers than for top performers (17%).

Extend this out and we can at least imagine a world where there are massive incentives for average workers to simply copy-paste from their AI and no incentive to learn the kind of creative thinking skills that could turn them into top performers. And not just at work. What if this happens at school, too? (See Commandment Six)

I know that several high-profile teams are working on omnipresent wearable AI devices. Sam Altman of OpenAI seems to be in the middle of at least two of them.[7] Whatever the form factor, we’ll soon all have something like ubiquitous ChatGPT available at the press of a button. It will be dangerously easy to ask it to do more thinking for us. To write notes to our loved ones. To build a meal plan and write a grocery list. To give us career advice. To come up with a poster slogan for tomorrow’s protest. To plan out our weekend.

The Marketing Department will invite us to welcome these innovative changes. They’ll confidently assert that all the AI magic will free up our mental energy to take on new, higher, more enlightened, more creative mental work.

But what if it’s like the car that frees us from the work of walking or biking, only to leave us stuck in traffic?

What’s the analogy?

Car is to traffic as AI is to social media.

What if people have to think even less, and so they have even more time to spend on TikTok in the Metaverse?

I’ll let you decide if that’s a brave future that would make our ancestors proud of how far we’ve come.

We have to think in order to be creative. We have to know things, like, in our real brain memory, in order to think.[8] Yet we’re going to be told that we don’t need to think and we don’t need to remember because the AI will do it for us.

2. Thou Shalt Reject AI-generated Art

Maybe I’ve missed the argument for what’s going to be fun about AI-generated novels and images and movies.

But I see this as an extension of Commandment One. When we ask AI to generate creative content for us, we lose the chance to exercise our own creative capacities. We submit to the statistical average, as encoded in the AI. We debase the human spirit.

This sort of gets to the very heart of the purpose of art.

Do we create art in order to express our humanity, or in order to produce an artifact that is statistically similar to other artifacts? There is only one right answer!

In fact, when AI is substantively involved (when it generates its own ideas), we can no longer call it art. We can only call it “content.”

3. Thou Shalt Protect the Future from the Generative Content Downward Spiral

There’s probably a better name for this. It’s the thing that happens when new AI is trained increasingly on AI-generated content from the previous generation. If AI-generated content is lower quality (at least in some key dimensions like empathy) then you could get a downward spiral, where each successive generation is worse than the previous, in the way that photocopies lose resolution every time you copy a copy and there’s no telling which was the original.

As an individual, there is not much one can do about this problem. But collectively, we do need to actively curate content spaces that are untainted by generative AI. This is exactly like curating museum collections.

4. Thou Shalt Reject Transformation For Its Own Sake

As AI advances, we will see how it will transform nearly every aspect of our lives. In ways that will seem unimaginable right now.

Imran Chaudhri, CEO of Humane, maker of the AI Pin[9]

I don’t want my life to be transformed. I don’t want my work to be transformed. I want them to be improved. Transformation is not a selling point; if somebody tries to sell me something because “AI will transform the world” – my reaction is to resist. That sort of advertising is fundamentally either a fear tactic (“Buy my product so you don’t get left behind by the other people who did buy my product”) or an appeal to the crudest form of consumerism (“If it’s new, it must be good!”).

5. Thou Shalt Shun AI Companions

We only have so much time in the day to devote to intimate relationships. It’s either with AI or with people. This is a zero-sum thing.

Seeing AI replace human intimacy seems to me to be part of the process of the collapse of civilization.

6. Thou Shalt Keep School Relevant

My kids are in elementary school. From now until at least college, AI will be able to do all their homework and pass all their tests.

I see how tempting it is for my kids to plug their homework into ChatGPT or Phind. They succumb sometimes. I bet there are a lot of people who succumb most of the time. Maybe even all the time.

It’s the Golden Age of cheating.

How on earth do we fix homework? Especially for writing.

My take is that we do need to get much better at creating AI-free spaces for our kids, especially at home. Like, the public school district should offer free services to help parents establish firewalls and device management policies on home devices and networks. This is a public good. We can’t afford to create a generation of AI-addicted cheaters.

7. Thou Shalt Not Allow AI to Write on Thy Behalf

I see the world of AI-generated PowerPoint and AI-generated email around the corner. It’s already happening.

The natural counterpart is AI-generated summaries of our incoming emails and documents.

So there’s an absurdity where human communication proceeds as follows:

- Human A writes bullet points for a presentation/email/document

- Human A’s AI turns these bullet points into a complete document

- Human B receives the document and asks AI to summarize it

- Human B receives the document, summarized in bullet points

Which, of course, could have been done more succinctly by having Human A simply send her bullet points to Human B.

It seems to me that we’re at great risk of simply losing trust in the human provenance of all digital text. And then what? Maybe we go back to a pre-literate world. (Socrates would be smiling.[10])

8. Thou Shalt Guiltlessly Embrace AI For Low-Risk Search

I’m not trying to give AI a hard time. It’s a miracle. In particular, it’s a godsend when one is searching for statistically plausible answers to questions where correctness is not essential.

For example, I sometimes use AI to suggest code when I’m programming. It works better when there’s a known answer – some very similar code has already been written – and the AI applies a little special sauce to customize for my request. That can be a nice time-saver.

Here are some questions that I would very happily farm out to an AI:

- “Where are my car keys?”

- “How do you replace an engine filter on a 2006 Volkswagen Rabbit?”

- “What’s the difference between single and double quotes in Bash scripts?”

- “Which alternative to copper wires has the best price/performance?”

- “How do you say ‘I want a mushroom’ in Italian?”

This is just a starter list. There is an unlimited number of these kinds of questions, of course. Generative AI’s power to reliably answer these questions is great – nay, positively magnificent.

The current Transformer architecture came from the world of automated translation at Google. And I suspect there’s a whole category of translation questions where this stuff would really shine. For example, the sites that take technical articles and “translate” them for younger kids. Or maybe more abstractly, take a book on software project management and “translate” it for agriculture or farming applications. I could imagine some productive cross-pollination where different professional subcultures translate their work for each other. “Translate the textbook on Civil Engineering into Marriage Counseling by abstracting the principles and using the bridge as a metaphor for a relationship.” That all sounds like interesting, fair game.

Disclaimer

OpenAI has a mega-billion-dollar valuation, so people are using it for something, and what do I know? I’m probably missing an untold number of interesting and positive use cases.

Notes

1. https://aiindex.stanford.edu/report/#individual-chapters

2. https://www.lesswrong.com/posts/WGpFFJo2uFe5ssgEb/an-overview-of-the-ai-safety-funding-situation#Open_Philanthropy_Open_Phil

3. See https://www.inc.com/nick-hobson/microsofts-satya-nadella-challenges-a-key-concept-from-steve-jobs.html. Apropos of this writing, Satya Nadella proposes to update the metaphor ad absurdum: that AI should be more like a steam engine than a bicycle. Imagine, for a moment, a world in which you traveled to the park on a personal steam engine.

4. For a good overview, see https://mitpress.mit.edu/9780262690096/toward-new-towns-for-america

5. https://www.washingtonpost.com/national/health-science/by-relying-on-gps-devices-are-we-literally-losing-our-way/2018/11/30/dd9eb6ae-e9bd-11e8-bbdb-72fdbf9d4fed_story.html

6. https://www.bcg.com/publications/2023/how-people-create-and-destroy-value-with-gen-ai

7. https://www.theinformation.com/articles/one-ai-device-to-rule-them-all

8. I like this book’s explanation of the idea from the perspective of an educator: https://www.coreknowledge.org/product/cultural-literacy-every-american-needs-know/

9. https://www.ted.com/talks/imran_chaudhri_the_disappearing_computer_and_a_world_where_you_can_take_ai_everywhere/transcript

10. https://fs.blog/an-old-argument-against-writing/